The current generation of AI agents has made significant progress in automating backend tasks such as summarization, data migration, and scheduling. While effective, these agents typically operate behind the scenes—triggered by predefined workflows and returning results without user involvement. However, as AI applications become more interactive, a clear need has emerged for agents that can collaborate directly with users in real time.

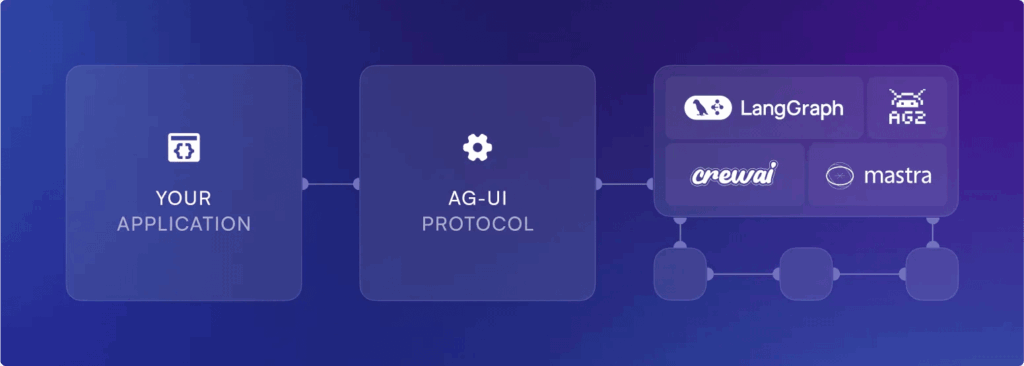

AG-UI (Agent-User Interaction Protocol) is an open, event-driven protocol designed to address this need. It establishes a structured communication layer between backend AI agents and frontend applications, enabling real-time interaction through a stream of structured JSON events. By formalizing this exchange, AG-UI facilitates the development of AI systems that are not only autonomous but also user-aware and responsive.

From MCP to A2A to AG-UI: The Evolution of Agent Protocols

The journey to AG-UI has been iterative. First came MCP (Model Context Protocol), which standardized how models and agents connect to external tools and data sources. Then A2A (Agent-to-Agent) protocols enabled orchestration between specialized AI agents.

AG-UI completes the picture: it’s the first protocol that explicitly bridges backend AI agents with frontend user interfaces. This is the missing layer for developers trying to turn backend LLM workflows into dynamic, interactive, human-centered applications.

Why Do We Need AG-UI?

Until now, most AI agents have been backend workers—efficient but invisible. Tools like LangChain, LangGraph, CrewAI, and Mastra are increasingly used to orchestrate complex workflows, yet the interaction layer has remained fragmented and ad hoc. Custom WebSocket formats, JSON hacks, or prompt engineering tricks like “Thought:\nAction:” have been the norm.

However, when it comes to building interactive agents like Cursor—which work side-by-side with users in coding environments—the complexity skyrockets. Developers face several hard problems:

- Streaming UI: LLMs produce output incrementally, so users need to see responses token by token.

- Tool orchestration: Agents must interact with APIs, run code, and sometimes pause for human feedback—without blocking or losing context.

- Shared mutable state: For things like codebases or data tables, you can’t resend full objects each time; you need structured diffs.

- Concurrency and control: Users may send multiple queries or cancel actions midway. Threads and run states must be managed cleanly.

- Security and compliance: Enterprise-ready solutions require CORS support, auth headers, audit logs, and clean separation of client and server responsibilities.

- Framework heterogeneity: Every agent tool—LangGraph, CrewAI, Mastra—uses its own interfaces, which slows down front-end development.

What AG-UI Brings to the Table

AG-UI offers a unified solution. It’s a lightweight event-streaming protocol that uses standard HTTP (with Server-Sent Events, or SSE) to connect an agent backend to any frontend. You send a single POST to your agent endpoint, then listen to a stream of structured events in real time.
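To make the streaming half of that flow concrete, here is a minimal sketch of turning raw SSE text into event objects as chunks arrive. It assumes each SSE `data:` field carries one JSON-encoded event. The `AgentEvent` shape, the `SseEventParser` class, and the `delta` field in the usage lines are illustrative names chosen for this example, not the API of the AG-UI SDKs.

```typescript
// Hypothetical event shape: a type tag plus whatever payload fields follow.
interface AgentEvent {
  type: string;
  [key: string]: unknown;
}

// Incremental parser for Server-Sent Events text.
// Feed it chunks as they arrive; it returns the events completed so far
// and keeps any partial frame buffered for the next call.
class SseEventParser {
  private buffer = "";

  push(chunk: string): AgentEvent[] {
    // Normalize CRLF so frames always end with a blank line ("\n\n").
    this.buffer = (this.buffer + chunk).replace(/\r\n/g, "\n");
    const events: AgentEvent[] = [];
    let boundary = this.buffer.indexOf("\n\n");
    while (boundary !== -1) {
      const frame = this.buffer.slice(0, boundary);
      this.buffer = this.buffer.slice(boundary + 2);
      // Join all "data:" lines of the frame; other SSE fields are ignored here.
      const data = frame
        .split("\n")
        .filter((line) => line.startsWith("data:"))
        .map((line) => line.slice(5).replace(/^ /, ""))
        .join("\n");
      if (data.length > 0) {
        events.push(JSON.parse(data) as AgentEvent);
      }
      boundary = this.buffer.indexOf("\n\n");
    }
    return events;
  }
}

// Usage: an event split across two network chunks is emitted only once complete.
const parser = new SseEventParser();
const partial = parser.push('data: {"type":"TEXT_MESSAGE_CONTENT","delta":"Hel');
const complete = parser.push('lo"}\n\ndata: {"type":"TOOL_CALL_START"}\n\n');
console.log(partial.length, complete.map((event) => event.type));
```

In a browser or Node client, the chunks would typically come from decoding the body of the POST response. Buffering matters because network chunk boundaries do not line up with event boundaries.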

Each event has:

- A type: e.g. TEXT_MESSAGE_CONTENT, TOOL_CALL_START, STATE_DELTA

- A minimal, typed payload
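One way to read "a type plus a typed payload" is as a discriminated union that the frontend folds into view state. The sketch below uses the three event types named above; the payload fields (`delta`, `toolCallName`) and the `ViewState` shape are assumptions made for illustration, not the protocol's definitions.

```typescript
// Assumed payloads for the three event types mentioned in the text.
type UiEvent =
  | { type: "TEXT_MESSAGE_CONTENT"; delta: string }
  | { type: "TOOL_CALL_START"; toolCallName: string }
  | { type: "STATE_DELTA"; delta: unknown[] };

// Hypothetical view model the UI renders from.
interface ViewState {
  text: string;              // assistant text streamed so far
  startedTools: string[];    // names of tools the agent has started
  pendingPatches: unknown[]; // state diffs queued for the state layer
}

const emptyView: ViewState = { text: "", startedTools: [], pendingPatches: [] };

// Pure reducer: each event produces a new view without mutating the old one.
function reduceEvent(view: ViewState, event: UiEvent): ViewState {
  switch (event.type) {
    case "TEXT_MESSAGE_CONTENT":
      return { ...view, text: view.text + event.delta };
    case "TOOL_CALL_START":
      return { ...view, startedTools: [...view.startedTools, event.toolCallName] };
    case "STATE_DELTA":
      return { ...view, pendingPatches: [...view.pendingPatches, ...event.delta] };
  }
}

// Usage: replay a short stream of events into a final view.
const demoEvents: UiEvent[] = [
  { type: "TEXT_MESSAGE_CONTENT", delta: "Hel" },
  { type: "TOOL_CALL_START", toolCallName: "search" },
  { type: "TEXT_MESSAGE_CONTENT", delta: "lo" },
];
const finalView = demoEvents.reduce(reduceEvent, emptyView);
console.log(finalView.text, finalView.startedTools);
```

Because the `type` field discriminates the union, the compiler checks that each branch reads only fields that exist on that event, and the same reducer can replay a logged stream for debugging.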

The protocol supports:

- Live token streaming

- Tool usage progress

- State diffs and patches

- Error and lifecycle events

- Multi-agent handoffs
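The article does not spell out the diff format, so the following sketch assumes JSON Patch style operations (RFC 6902) and implements only a small subset (`add`, `replace`, `remove`) with minimal validation. All names here (`Json`, `PatchOp`, `applyPatch`) are hypothetical helpers for illustration.

```typescript
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

interface PatchOp {
  op: "add" | "replace" | "remove";
  path: string; // JSON Pointer, e.g. "/rows/1/status"
  value?: Json;
}

// Split a JSON Pointer into unescaped keys ("~1" means "/", "~0" means "~").
function decodePointer(path: string): string[] {
  if (path === "") return [];
  return path
    .slice(1)
    .split("/")
    .map((part) => part.replace(/~1/g, "/").replace(/~0/g, "~"));
}

// Apply one operation, copying only the containers along the path.
function applyOp(node: Json, keys: string[], op: PatchOp): Json {
  const head = keys[0];
  const value = op.value ?? null;
  if (head === undefined) {
    // Empty pointer: the operation targets the whole document.
    return op.op === "remove" ? null : value;
  }
  const rest = keys.slice(1);

  if (Array.isArray(node)) {
    const copy = node.slice();
    const index = head === "-" ? copy.length : Number(head);
    if (!Number.isInteger(index) || index < 0 || index > copy.length) {
      throw new Error(`Bad array index in path ${op.path}`);
    }
    if (rest.length > 0) {
      const child = copy[index];
      if (child === undefined) throw new Error(`Missing element in path ${op.path}`);
      copy[index] = applyOp(child, rest, op);
    } else if (op.op === "remove") {
      copy.splice(index, 1);
    } else if (op.op === "add") {
      copy.splice(index, 0, value);
    } else {
      copy[index] = value;
    }
    return copy;
  }

  if (node !== null && typeof node === "object") {
    const copy = { ...node };
    if (rest.length > 0) {
      const child = copy[head];
      if (child === undefined) throw new Error(`Missing key in path ${op.path}`);
      copy[head] = applyOp(child, rest, op);
    } else if (op.op === "remove") {
      delete copy[head];
    } else {
      copy[head] = value;
    }
    return copy;
  }

  throw new Error(`Cannot apply ${op.path} to a primitive value`);
}

// Apply a list of operations in order, returning a new document.
function applyPatch(doc: Json, ops: PatchOp[]): Json {
  return ops.reduce<Json>((state, op) => applyOp(state, decodePointer(op.path), op), doc);
}

// Usage: update one cell and append a row without resending the table.
const table: Json = { rows: [{ id: 1, status: "pending" }, { id: 2, status: "pending" }] };
const patched = applyPatch(table, [
  { op: "replace", path: "/rows/1/status", value: "done" },
  { op: "add", path: "/rows/-", value: { id: 3, status: "pending" } },
]);
console.log(JSON.stringify(patched));
```

Returning a new document instead of mutating in place keeps the previous state available, which suits UI frameworks that detect changes by reference. A production client would more likely rely on an established JSON Patch library than on a hand-rolled subset like this one.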

Developer Experience: Plug-and-Play for AI Agents

AG-UI comes with SDKs in TypeScript and Python, and is designed to integrate with virtually any backend, including OpenAI, Ollama, LangGraph, or custom agents. You can get started in minutes using the project's quick-start guide and playground.

With AG-UI:

- Frontend and backend components become interchangeable

- A React UI built from CopilotKit components can be dropped in with zero backend modification

- GPT-4 can be swapped for a local Llama model without changing the UI

- Agent tools (LangGraph, CrewAI, Mastra) can be mixed and matched through the same protocol

AG-UI is also designed with performance in mind: use plain JSON over HTTP for compatibility, or upgrade to a binary serializer for higher speed when needed.

What AG-UI Enables

AG-UI isn’t just a developer tool—it’s a catalyst for a richer AI user experience. By standardizing the interface between agents and applications, it empowers developers to:

- Build faster with fewer custom adapters

- Deliver smoother, more interactive UX

- Debug and replay agent behavior with consistent logs

- Avoid vendor lock-in by swapping components freely

For example, a collaborative agent powered by LangGraph can now share its live plan in a React UI. A Mastra-based assistant can pause to ask a user for confirmation before executing code. AG2 and A2A agents can seamlessly switch contexts while keeping the user in the loop.

Conclusion

AG-UI is a major step forward for real-time, user-facing AI. As LLM-based agents continue to grow in complexity and capability, the need for a clean, extensible, and open communication protocol becomes more urgent. AG-UI delivers exactly that—a modern standard for building agents that don’t just act, but interact.

Whether you’re building autonomous copilots or lightweight assistants, AG-UI brings structure, speed, and flexibility to the frontend-agent interface.


Thanks to the Tawkit team for the thought leadership and resources for this article. The Tawkit team supported us in producing this content.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.